Domain-Driven Design: time for a case study

Today I’m going to make good on my promise: we will finally apply the concepts of Domain-Driven Design to a piece of source code.

I’ve developed a fictional case study that I hope is both simple enough to understand and realistic enough to be useful.

Let’s begin our exploration by getting to know the protagonists.

Meet the File Crunchers

Picture a successful SaaS company. Now imagine that this company had just brought a new collaboration product to market. Shortly after the launch, the product had already won over a passionate group of customers.

However, the first wave of feedback revealed one gap in functionality: customers would love to be able to upload files to the new product.

The company responded swiftly to that feedback by spinning up a separate team to add the missing feature. The core team was small but combined several skills: a product manager, a user experience designer and a few developers.

With all that attention, the team felt a lot of pressure to deliver something sooner rather than later. To avoid keeping customers waiting too long, the team decided on an iterative approach: get something valuable out early, then make it better.

Because the team expected to handle a lot of files very soon, they jokingly called themselves “The File Crunchers”.

Coming up with an architecture

Right after the team formed, its members had to make a few major design decisions:

- How would they best integrate the new feature with the existing product?

- Where would they store all those files?

- What would they need to build and what could they buy?

While the team members tried to answer these questions, they made two observations:

- The team needed to deliver something as soon as possible.

- Moving all those files through the product’s application servers would place too much of a burden on them.

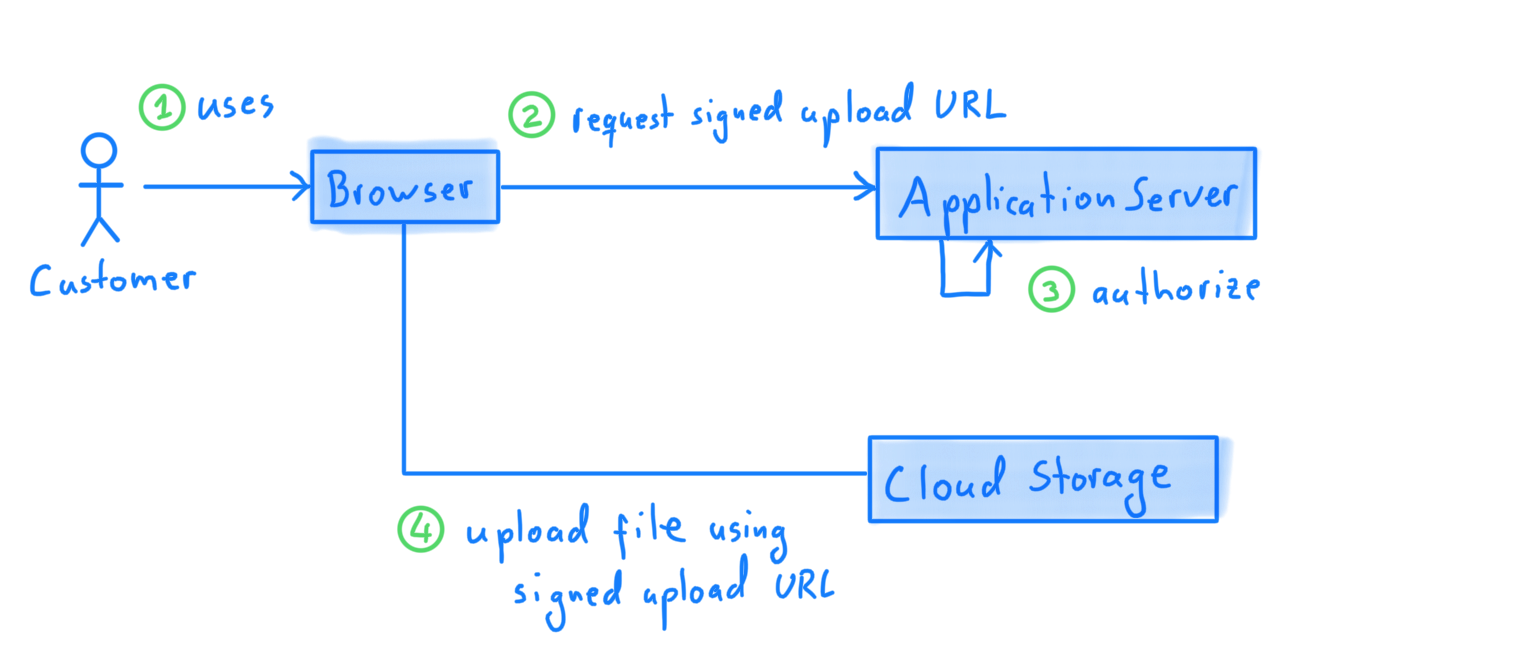

These observations led the team to settle on an architecture. The team decided to integrate with an external provider of cloud storage. Customers would directly upload files to the external provider, thereby bypassing the existing application servers. The application servers would only be responsible for authorizing customers to access the cloud storage.

More specifically, a customer’s browser would request a signed upload url from the application servers. After authorizing the request, the application servers would respond with that signed url. The url would only be valid for uploads up to a certain size, and it would expire after a short time.

Using the signed upload url, the customer’s browser would then upload the file directly to the cloud storage. Once done, the browser would inform the application servers that the upload had completed.
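The article doesn’t say how the storage provider signs its urls, and real providers each define their own format. As a sketch of the general idea only, assume an HMAC-SHA256 signature over the upload path, the expiry time and the size limit. The class `UploadUrlSigner`, its methods and the `expires`/`maxSize`/`signature` query parameters are all hypothetical. Because the signature covers the expiry and size limit, the browser can’t extend either one without invalidating the url.

```java
import java.nio.charset.StandardCharsets;
import java.security.InvalidKeyException;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.time.Instant;
import java.util.HexFormat;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical signer: a real cloud storage provider defines its own url format
// and signing scheme, and performs the verification on its side.
final class UploadUrlSigner {
    private static final String ALGORITHM = "HmacSHA256";
    private final byte[] secret;

    UploadUrlSigner(byte[] secret) {
        this.secret = secret.clone();
    }

    // Called by the application servers after authorizing the customer.
    String sign(String uploadPath, Instant validUntil, long maxUploadSize) {
        long expires = validUntil.getEpochSecond();
        return uploadPath
                + "?expires=" + expires
                + "&maxSize=" + maxUploadSize
                + "&signature=" + hmacHex(uploadPath, expires, maxUploadSize);
    }

    // Called on the storage side before accepting an upload.
    boolean allowsUpload(String uploadPath, long expires, long maxSize, String signature,
                         Instant now, long uploadSize) {
        byte[] expected = hmacHex(uploadPath, expires, maxSize).getBytes(StandardCharsets.UTF_8);
        byte[] actual = signature.getBytes(StandardCharsets.UTF_8);
        // MessageDigest.isEqual does not stop early at the first differing byte.
        boolean authentic = MessageDigest.isEqual(expected, actual);
        return authentic && now.getEpochSecond() <= expires && uploadSize <= maxSize;
    }

    // The signature covers path, expiry and size limit, so changing any of them invalidates it.
    private String hmacHex(String uploadPath, long expires, long maxSize) {
        try {
            Mac mac = Mac.getInstance(ALGORITHM);
            mac.init(new SecretKeySpec(secret, ALGORITHM));
            byte[] digest = mac.doFinal(
                    (uploadPath + "\n" + expires + "\n" + maxSize).getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(digest);
        } catch (NoSuchAlgorithmException | InvalidKeyException e) {
            throw new IllegalStateException("HMAC-SHA256 unavailable", e);
        }
    }
}
```

The application servers only need the shared secret to call `sign`. They never touch the file itself, which is exactly what keeps the upload traffic off them.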

Milestones and releases

As I said earlier, the team decided to use an iterative approach. Here’s a breakdown of what the team has released so far:

Release 1: Signed upload urls

Without the ability to upload files, nothing else would work. So the team decided to simply sign an upload url for every authenticated user that requested one.

Release 2: Audit logging

The security department was really concerned about what customers might upload, so they asked the team to record all the generated urls in an audit log.

Release 3: Observability

The team noticed that the audit log service was not quite as reliable as they had hoped, so they decided to add instrumentation around it. This would give them better insight into how big a problem it really was.
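In the team’s code, shown later in this article, the instrumentation is written inline around the audit log call. To make the idea concrete, here is a minimal sketch of the same measurement as a reusable wrapper: it times a call, reports either a response time or a failure, and never lets a broken metrics sink break the operation it observes. The `Metrics` interface and the `Instrumented` helper are hypothetical. The method names echo the team’s `metricsService`, but unlike those, they are assumed not to throw checked exceptions.

```java
import java.util.concurrent.Callable;

// Hypothetical metrics sink; the method names echo the calls in the team's code.
interface Metrics {
    void responseReceived(String operation, long responseTimeNanos);

    void responseNotReceived(String operation);
}

final class Instrumented {
    private Instrumented() {}

    // Runs `action`, reports its duration on success or a failure on error,
    // and rethrows whatever the action itself threw.
    static <T> T call(Metrics metrics, String operation, Callable<T> action) throws Exception {
        long start = System.nanoTime();
        T result;
        try {
            result = action.call();
        } catch (Exception e) {
            reportQuietly(() -> metrics.responseNotReceived(operation));
            throw e;
        }
        long elapsedNanos = System.nanoTime() - start;
        reportQuietly(() -> metrics.responseReceived(operation, elapsedNanos));
        return result;
    }

    // A failing metrics sink must never break the operation it observes.
    private static void reportQuietly(Runnable report) {
        try {
            report.run();
        } catch (RuntimeException ignored) {
            // Deliberately ignored, like the metrics failures in the team's code.
        }
    }
}
```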

Release 4: Storage limits

From the audit logs, the team could already see that some customers were using the storage feature a lot more than others. To prevent excessive bills, they decided to limit the amount of storage each customer could consume.
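Because the files never pass through the application servers, the team’s code (shown later in this article) can’t charge the quota for a file’s actual size. Instead, it reserves the maximum upload size at the moment it signs a url, inside a serializable database transaction. Here is a minimal single-process sketch of that check-and-reserve step. The `StorageQuota` class is hypothetical, and `synchronized` stands in for the serializable transaction.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical single-process quota. The team's code uses a serializable
// database transaction instead; `synchronized` plays the same role here.
final class StorageQuota {
    private final long availableBytes;
    private final Map<String, Long> consumedBytes = new HashMap<>();

    StorageQuota(long availableBytes) {
        this.availableBytes = availableBytes;
    }

    // Reserves `bytes` for the account only if the total stays within the quota.
    // Checking and reserving happen atomically, so two concurrent requests cannot
    // both see the same remaining quota and both succeed.
    synchronized boolean consumeIfEnoughAvailable(String accountId, long bytes) {
        long consumed = consumedBytes.getOrDefault(accountId, 0L);
        if (consumed + bytes > availableBytes) {
            return false;
        }
        consumedBytes.put(accountId, consumed + bytes);
        return true;
    }

    synchronized long consumed(String accountId) {
        return consumedBytes.getOrDefault(accountId, 0L);
    }
}
```

Without that atomicity, two browsers requesting urls at the same moment could both read the same consumed total and together exceed the limit.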

Release 5: Storage tiers

It became clear that some customers were willing to pay extra for their additional storage needs. The team decided to experiment with a higher limit for those customers.

Release 6: Error messages

As customers kept using the service, they occasionally ran into errors. To help them deal with these errors, the team decided to add useful error messages to the application.

Show me the code

With all of this context covered, we can now take a look at a piece of code developed by our fictional team.

Although this is only an example, I did my best to recreate the kind of code I’ve seen all the time in my career. It is written in Java and is neither particularly terrible nor particularly delightful. Most importantly, it works and gets the job done.

Before you dive into the code, remember why we want to look at it: to learn about the benefits of a shared mental model expressed in code. Briefly recall the previous article, in which we learned a way to judge the quality of our designs:

If the developers did their job well, then they should pass the following test without effort:

- Invite a product manager or designer to pick a term from the team’s common language.

- Give a developer a few seconds to find that same term in the code.

- Ask the developer to read the relevant code aloud.

- Look at everyone’s expressions. How much confusion is there?

Code that is a direct representation of the team’s shared mental model should cause little confusion. But if the code is packed with technical jargon and irrelevant detail, you’ll see lots of puzzled faces.

As you go over the next couple of lines, picture yourself in the situation of the developer who has to explain the code to a product manager.

```java
public APIResponse createSignedUploadUrl(APIRequest request) {
    String userId = null;
    try {
        var sessionDetails = sessionStore.lookupSession(request.getSessionId());
        if (sessionDetails == null) {
            return APIResponse.notAuthenticatedError();
        }
        userId = sessionDetails.getUserId();
    } catch (IOException e) {
        return APIResponse.internalServerError();
    }

    var maxUploadSize = maxUploadSizePerFileInBytes;
    if (featureFlagService.useLargerFiles(userId)) {
        maxUploadSize *= 2;
    }

    try {
        databaseConnection.setAutoCommitEnabled(false);
        databaseConnection.setTransactionIsolation(TRANSACTION_SERIALIZABLE);
        var storageQuotaUsed = databaseConnection.queryConsumedStorageQuota(userId);
        if (storageQuotaUsed + maxUploadSize <= availableStorageQuota) {
            databaseConnection.insertStorageQuotaUsage(userId, maxUploadSize);
            databaseConnection.commit();
        } else {
            databaseConnection.rollback();
            return APIResponse.storageQuotaExceeded();
        }
    } catch (SQLException e) {
        return APIResponse.internalServerError();
    }

    var urlValidUntil = Instant.now().plusSeconds(60);
    var signedUrl = signedUrlService.signUrl(urlValidUntil, maxUploadSize);

    try {
        long auditLogOperationStart = System.nanoTime();
        auditLogService.write(userId, signedUrl);
        final long responseTime = System.nanoTime() - auditLogOperationStart;
        try {
            metricsService.responseReceived(writeAuditLogName, responseTime);
        } catch (IOException e) {
            // ignore
        }
    } catch (IOException e) {
        try {
            metricsService.responseNotReceived(writeAuditLogName);
        } catch (IOException err) {
            // ignore
        }
        return APIResponse.internalServerError();
    }

    return APIResponse.successWithUrl(signedUrl);
}
```

Time for a review

Okay, what would you say about that piece of code?

Did you get a rough idea of what is going on? Could you see how the code has grown organically as more features got added to it? Would you feel comfortable enough to change it and deploy that change to production?

But most importantly: how much does it represent the team’s shared mental model?


How would you rate that piece of code on a scale from 1 to 10?

It’s probably hard to rate the code if you can’t compare it to something else. So let’s take a look at a different way to implement the same functionality.

Refinement

Before you look at the alternative implementation, I want to assure you that both pieces of code have exactly the same behaviour.

In fact, I arrived at this version by gradually changing the previous implementation. I didn’t know where I would end up; I simply kept refining what was already there, relying on an extensive suite of automated tests and the refactoring tools provided by my IDE.

Although I only took tiny steps with no clear goal in mind, I was guided by a single question: how could I turn this code into the basis for a shared mental model?

I’m quite pleased with how it turned out, although there’s still plenty of room for improvement.

But take a look for yourself:

```java
class CreateSignedUploadUrlCommand {
    public static final int defaultUploadSize = 1 * 1024 * 1024; // bytes
    public static final int availableStorageQuota = defaultUploadSize * 100; // bytes
    public static final int uploadUrlLifetime = 60; // seconds

    private final CreateSignedUploadUrlDelegate d;

    public CreateSignedUploadUrlCommand(CreateSignedUploadUrlDelegate d) {
        this.d = d;
    }

    public String execute(String accountId) {
        var maxUploadSize =
                d.participatesInIncreasedUploadSizeExperiment(accountId)
                        ? defaultUploadSize * 2
                        : defaultUploadSize;

        var quotaWasConsumed =
                d.consumeStorageQuotaIfEnoughAvailable(accountId, maxUploadSize);
        var insufficientQuotaForUpload = !quotaWasConsumed;
        if (insufficientQuotaForUpload) {
            return null;
        }

        var uploadUrl = d.createSignedUploadUrl(uploadUrlLifetime, maxUploadSize);
        d.recordUploadUrlInAuditLog(accountId, uploadUrl);
        return uploadUrl;
    }
}
```
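The command talks to the outside world only through `CreateSignedUploadUrlDelegate`, which isn’t shown here. Presumably the sessions, database transactions, metrics and error responses from the first implementation now live behind it. The following is one possible shape of that interface, inferred from the four calls `execute` makes; the parameter and return types are assumptions based on how the command uses them. It is paired with a hypothetical `FakeDelegate` that shows how the command could be tested without a session store, database or audit log service.

```java
import java.util.ArrayList;
import java.util.List;

// One possible shape, inferred from the four calls that execute() makes.
// Parameter and return types are assumptions based on how the command uses them.
interface CreateSignedUploadUrlDelegate {
    boolean participatesInIncreasedUploadSizeExperiment(String accountId);

    boolean consumeStorageQuotaIfEnoughAvailable(String accountId, int maxUploadSize);

    String createSignedUploadUrl(int lifetimeSeconds, int maxUploadSize);

    void recordUploadUrlInAuditLog(String accountId, String uploadUrl);
}

// Hypothetical test double: answers with fixed values and records what happened.
final class FakeDelegate implements CreateSignedUploadUrlDelegate {
    private final boolean inExperiment;
    private final boolean quotaAvailable;
    final List<String> calls = new ArrayList<>();

    FakeDelegate(boolean inExperiment, boolean quotaAvailable) {
        this.inExperiment = inExperiment;
        this.quotaAvailable = quotaAvailable;
    }

    @Override
    public boolean participatesInIncreasedUploadSizeExperiment(String accountId) {
        return inExperiment;
    }

    @Override
    public boolean consumeStorageQuotaIfEnoughAvailable(String accountId, int maxUploadSize) {
        calls.add("consume " + accountId + " " + maxUploadSize);
        return quotaAvailable;
    }

    @Override
    public String createSignedUploadUrl(int lifetimeSeconds, int maxUploadSize) {
        // No real signing: the fake url just exposes its parameters.
        return "fake-url?lifetime=" + lifetimeSeconds + "&maxSize=" + maxUploadSize;
    }

    @Override
    public void recordUploadUrlInAuditLog(String accountId, String uploadUrl) {
        calls.add("audit " + accountId + " " + uploadUrl);
    }
}
```

For example, `new CreateSignedUploadUrlCommand(new FakeDelegate(false, false)).execute("acme")` would return `null`. The fake’s `calls` would then contain only the quota check, with no audit log entry. Expressed as a test, that reads almost like the team’s own description of the feature.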

Is the second implementation any better?

Have we made any progress towards our goals?

Let’s recall our criteria for good design:

Code that is a direct representation of the team’s shared mental model should cause little confusion. But if the code is packed with technical jargon and irrelevant detail, you’ll see lots of puzzled faces.

In that regard, I think we have made a lot of progress. The technical jargon has mostly disappeared. The remaining concepts are now a lot more visible, and they can be understood by the entire team, not just by the developers.

The second implementation is not perfect of course. There’s still a lot of room for improvement. But I think we have accomplished our goal for today: to see the benefits of Domain-Driven Design in action.

What’s next?

Over the past couple of weeks we’ve learned a lot about Domain-Driven Design. This case study has allowed us to see some of the most important concepts in action. There’s only one more thing left for us to do: it’s time for a conclusion.